“Bureaucracy Does Its Thing: Institutional Constraints on U.S.-GVN Performance in Vietnam.” by R.W. Komer. Rand Corporation, August, 1972. https://www.rand.org/content/dam/rand/pubs/reports/2005/R967.pdf

Readers who have served in Iraq or Afghanistan, but particularly Iraq, would be wise to lay in a stock of their favorite adult beverage before diving into Robert Komer’s first-hand study of institutional inertia in the Vietnam War. In his final chapter, Komer makes the accurate and vital point that the past does not provide a foolproof template for the future. That said, the past often provides a far better sense of what will not work than of what will. Iraq veterans are likely to respond to Komer’s study with a fair degree of rage.

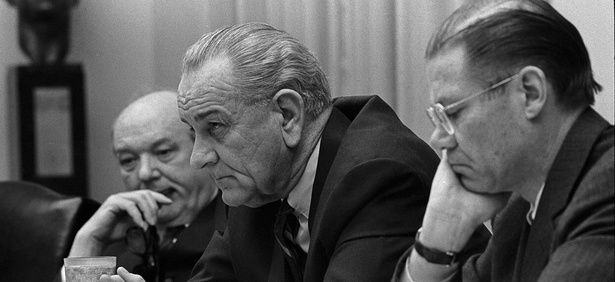

Known as “Blowtorch Bob” for his direct, unfiltered manner, Robert Komer served in Vietnam from 1967 to 1968 as head of the Civil Operations and Revolutionary Development Support (CORDS) program. Before Vietnam he served on the National Security Council, including a stint as interim National Security Adviser to President Johnson; after Vietnam he was appointed Ambassador to Turkey in 1968. Though his in-country tenure was brief, it covered a critical period in the Vietnam War and followed years of direct involvement in Vietnam policy in Washington. Although CORDS was responsible for the “hearts and minds” campaign, Komer was no soft-hearted do-gooder. CORDS had both military and civilian staff, fell under Military Assistance Command – Vietnam, and was responsible for over 20,000 deaths through the Phoenix program of targeted assassinations.

In 1971, the Advanced Research Projects Agency, through the Rand Corporation, commissioned Komer to write a study of the ways institutional constraints and characteristics affected U.S. and Vietnamese prosecution of the Vietnam War. Komer’s overwhelmingly caustic and pessimistic assessment is even more remarkable for having been written three years before the final collapse of the South Vietnamese government. Nobody reading this study in 1972 should have been the least bit surprised when North Vietnamese tanks rolled into Saigon.

Komer made four observations that should give pause to anyone advocating for direct U.S. involvement in a civil war or insurgency in a distant country with an alien culture.

- “[T]o a greater extent than is often realized, we recognized the nature of the operational problems we confronted in Vietnam, and…our policy was designed to overcome them.” (v)

- Official U.S. policy directed a counterinsurgency response that never fully materialized on the ground; both U.S. and Vietnamese military leaders employed conventional military tactics regardless of policy guidance.

- “[The U.S.] did not use vigorously the leverage over the Vietnamese leaders that our contributions gave us. We became their prisoners rather than they ours; the [Government of Vietnam] used its weakness far more effectively as leverage on us than we used our strength to lever it.” (vi)

- The various agencies operating in Vietnam, regardless of the circumstances and guidance, performed their “institutional repertoires” with disastrous results.

Along the way Komer provides important insights into the questions of organization, civil v. military authority, and other tactical and procedural issues that played a role in the final outcome.

In the years since the end of the Vietnam War, a common picture has emerged of falsified or rose-colored reporting, inappropriate metrics (e.g., body counts), and relentless, unjustified optimism on the part of military leaders in Saigon. Komer strongly challenges that picture. While there are any number of examples of false or misleading reporting, and while the public benchmarks for success were unquestionably inappropriate, Komer makes a compelling forest/trees argument predicated largely on the record in The Pentagon Papers. Sure, battalion and brigade commanders may have inflated their body counts and pencil-whipped reports on “pacification,” but Komer argues that policy makers in Washington were well aware of the deteriorating situation in Vietnam, and that they recognized the causes of that situation. No matter how many fake trees may have been reported, the White House knew the forest was on fire.

Aside from accentuating the positive, official reporting tends to suffer from an obsession with quantification. Consequently, the government privileges indicators that can be quantified without regard to their relevance. In campaigns like Vietnam and Iraq, where there is no clear enemy order of battle, information that can be counted is often meaningless, and the most important information is often subjective, intuitive, and constantly shifting. When public support is the key to success, effective polling is the obvious measure of choice. Unfortunately, effective polling relies on a whole suite of conditions that do not exist in a war zone–accurate census data and security for pollsters first and foremost.

The situation is further aggravated when the metrics change constantly. Following the Vietnam War, the U.S. military reached the obvious conclusion that individual replacement every twelve months shattered unit cohesion, deprived the force of institutional knowledge, and created vulnerabilities. Unfortunately, DoD was unwilling, or politically unable, to come to grips with the implications of those observations. While the military eliminated individual rotations, it simply replaced them with unit rotations, thereby easing the unit cohesion problem at the expense of making the institutional knowledge problem even worse. At least under individual replacement, only about 1/12 of the force rotated in a given month. Under unit replacement, nearly all institutional knowledge would depart a given operational area within a week.

The rotation of units, combined with the careerist pressures of the military, caused each incoming unit in Iraq to select a largely new set of metrics to assess progress. Incoming units established new metrics, determined that their predecessors had left a mess, then spent 12 (or later 15) months working to improve the new metrics, at which point they rotated out and the cycle repeated. Ironically, this cycle of mismeasurement and misinformation did not require unethical or inaccurate reporting by anyone. Incoming units really did find a mess because the situation was generally deteriorating. They not unreasonably determined that their predecessors were both failing to improve the situation and measuring the wrong indicators. In setting new indicators, incoming units were careful to select factors that they could both affect and measure, knowing that failure to accomplish their goals would be career-ending for their commanders. Units were therefore able to improve their metrics while the situation around them worsened.

Komer’s articulation of similar dynamics in Vietnam over three decades earlier raises the obvious question, and accusation: why did we not learn? Why did we repeat the errors of our fathers?

This brings us to the central premise of Komer’s study and his most valuable insight. Large, bureaucratic organizations do what they are organized, resourced, and trained to do. They are generally very good at dealing with clearly defined, recurring problems. They are not good at adapting to new, poorly defined problems. Even when the characteristics of a new problem become clear and the solution is visible, existing organizations will not adapt unless shaken by a disaster and threatened with destruction–sometimes not even then.

To understand the military’s failure to adapt in Vietnam it is essential to bear in mind both its antecedents and its contemporaries. The generals who led in Vietnam mostly began their careers in World War II, a conflict of firepower, linear tactics, and large-unit engagements. They followed World War II with Korea, where effective conventional tactics eventually worked to achieve a stalemate that favored the U.S. and its South Korean allies. The highly superficial commonalities between Korea and Vietnam–wars against Asian Communists from northern rump states–caused U.S. military leaders to make a category error. Meanwhile, the war in Vietnam was never the main show for the U.S. military. Throughout the conflict, the U.S. military’s primary role remained deterrence of the Soviet Union, necessitating highly conventional force design, training, equipping, and doctrine.

This is the point that Komer drives home again and again. Bureaucracies “perform their institutional repertoires,” and the institutional repertoire of the U.S. military, particularly the U.S. Army, was big unit wars against conventional enemies.

Komer’s study focuses primarily on the U.S. bureaucracy, and on the Vietnamese bureaucracy through the lens of its interaction with its American counterpart, and he clearly believes at a very basic level that better performance would have resulted in better outcomes. Nevertheless, he acknowledges the possibility that the problem was unsolvable and articulates the fundamental dilemma of great power counterinsurgency over and over.

The GVN’s performance was even more constrained by its built-in limitations than that of the U.S. In the last analysis, perhaps the most important single reason why the U.S. achieved so little for so long in Vietnam was that it could not sufficiently revamp, or adequately substitute for, a South Vietnamese leadership, administration, and armed forces inadequate to the task. The sheer incapacity of the regimes we backed, which largely frittered away the enormous resources we gave them, may well have been the greatest single constraint on our ability to achieve the aims we set ourselves at acceptable cost. (vi)

Quite simply, if the local government were not a complete mess, it would not need great power intervention in the first place. There is a disparity of motivation between the great power and the insurgent, but there is an equally large disparity between the great power and the supported government. A “victory” that requires the ruling class to surrender its power, wealth, or ideology is not a victory in their eyes, but civil wars and insurgencies rarely gain traction in societies with equitable distribution and representation.

Veterans of Iraq and Afghanistan will see clear shades of Baghdad and Kabul in Komer’s assessment of the GVN. Indeed, the 15th of T.E. Lawrence’s “Twenty-Seven Articles” points to his understanding of both the inherent problem and the difficulty, for effective soldiers, in overcoming it:

Do not try to do too much with your own hands. Better the Arabs do it tolerably than that you do it perfectly. It is their war, and you are to help them, not to win it for them. Actually, also, under the very odd conditions of Arabia, your practical work will not be as good as, perhaps, you think it is. (http://www.pbs.org/lawrenceofarabia/revolt/warfare4.html)

Lawrence was uncharacteristically humble in circumscribing his advice to the culture he knew well, but the same basic dynamic was at work in Indochina. The frustration of watching poor or corrupt performance is simply too much for the average western military professional, and yet poor and corrupt performance will be the standard in any nation requiring outside assistance. It is a paradox understood by experienced counterinsurgents (and parents of teenagers) that providing less assistance can engender better performance. Komer points out that President Johnson’s limitations on troops and bombing following the “Tet shock” forced “the GVN and ARVN at long last to take such measures as manpower mobilization and purging of poor commanders and officials. After Tet 1968, GVN performance improved significantly” (142). Komer’s observation of bureaucratic behavior and limitations, combined with the paradoxical realities of counterinsurgency, poses a cautionary tale for anyone contemplating intervention.

The U.S. military of 1965 (or 2001) was an enormous organization, run through almost comical levels of bureaucracy by necessity. There is simply no other known method of organizing and operating a worldwide organization requiring millions of people and billions of dollars. Contrary to the mythology of Hollywood and Washington, DC, bureaucracies are not made up of or run by mindless drones, imagining new forms to require so they can be left in peace to enjoy their donuts and cigarette breaks. Bureaucratic leaders tend to be thorough, energetic, optimistic, and ambitious, and they are generally highly reliant on rules and order. They advance by closely following established procedures and avoiding embarrassment. When intervening in a civil war or insurgency, we place such leaders in a chaotic situation in which they have little control over actions or outcomes and are surrounded by people whom they see as incompetent, corrupt, and deceitful. Because their careers depend upon providing measurable results and avoiding embarrassing failures, they have strong incentives to gain control of the immediate tactical situation and to emphasize areas in which they can control the variables. In other words, they are exactly the wrong people to operate in the roundabout, oblique manner that Lawrence recommended.

The problem is aggravated by the mismatch between rhetoric and reality. During the Vietnam War, the U.S. government claimed that maintaining a friendly South Vietnam was a vital U.S. national security interest, but it never acted in a way that supported that claim. In both the Civil War and World War II, the U.S. Army enacted tectonic shifts in its manner of operations, admittedly facing resistance at every turn, because the unprecedented threats provided the impetus to overcome bureaucratic inertia. The Army’s approach to Vietnam (and Iraq and Afghanistan) demonstrated that its leaders did not believe their own dire assessments of the war’s importance. The Army failed to alter assignment policies or organization in ways that would have materially improved its efforts, even when those changes were identified. Moreover, the Army’s inertia may have been a rational response to the situation. The U.S. faced the Soviet Union in a peer competition that threatened the destruction of human civilization, while the 1975 collapse of the South Vietnamese regime with almost no significant consequences for the United States proved that the threat there had been badly overblown. Organizing to fight a conventional, mechanized war against regular units was precisely what the United States should have been doing in the 1960s.

Recent work by Walter C. Ladwig, III (“Influencing Clients in Counterinsurgency: U.S. Involvement in El Salvador’s Civil War, 1979–92,” International Security, Volume 41, Number 1, Summer 2016, pp. 99-146) raises the possibility of alternative approaches that do not rely so heavily on the adaptability of rigid military bureaucracies and do not commit U.S. prestige so decisively. Inherent in the more indirect approach, however, is the willingness to fail. While “failure is not an option” is rarely actually spoken in the military, the phrase “we don’t plan for failure” is common. Planning for or accepting the possibility of failure is anathema to the successful military officer in the same way that standing by and watching local partners accept bribes or perform incompetently is. However, the willingness to fail is a basic necessity for U.S. intervention in wars of choice if we do not wish to keep repeating the mistakes of Indochina, Afghanistan, and Iraq.

Once we accept the premise that the outcomes in places like Vietnam and Iraq can be desirable without being essential, we can correct the category error that has led us to excessive, but ultimately failed, full-scale military intervention. A civil war in a strategically useful but not essential country on the far side of the world may be a war for the people who live there without being a war for the United States. That is not to say there will not be violence or U.S. casualties–both occur often without being deemed a war–but they will not require mobilization of the nation or national commitments of prestige to the point that the U.S. cannot accept failure. Rather than view them as wars, U.S. decision makers can view them as investments, and just as with an investment they can define what risk they are willing to take and build an alternative plan for failure.

Komer describes the failures of the various command structures throughout the Vietnam conflict. Despite the recognition that the conflict was primarily political, the military always ended up in charge because it provided the bulk of the resources. Ambassador Henry Cabot Lodge was allergic to managing, and Ambassador Maxwell Taylor, who seemed to be the perfect “civilian” to merge the various efforts and subordinate the military, instead deferred to the generals and the military command structure from which he had sprung. In his conclusion, Komer argues that ad hoc organizations may be superior to repurposing existing organizations because they will not be so wedded to “institutional repertoires.” He maintains that such ad hoc organizations were generally effective in Vietnam, but it is worth noting that the Coalition Provisional Authority that nominally ran the early campaign in Iraq was a conspicuous failure. Theory and common sense would tell us that a strong ambassador is the right person to head such an effort, but history gives us reason for caution. Such an arrangement might function better in an environment with less military presence, since the overwhelming resource disparity lends power to the military chain of command that civilian agencies find hard to overcome. A more indirect approach might reduce that inequality by lowering the requirement for and value of military contributions.

Regardless of the command structure, it is essential to identify measures of both performance and effectiveness, determine the indicators and resource their collection, and then follow the evidence to legitimate, no matter how unwelcome, conclusions. Komer devotes extensive space to assessing the assessments and reaches the conclusion that an external review is a necessity. In Vietnam the Office of the Secretary of Defense (OSD) conducted, in Komer’s view, excellent analysis, but the Joint Chiefs of Staff formally objected, twice, to OSD analyzing military performance in the field. Meanwhile, MACV consistently analyzed the wrong data because its theory of the war was wrong. In line with fighting a conventional war, it developed conventional order of battle metrics that failed to capture meaningful information. This is one lesson the U.S. military learned between 1975 and 2003 but did not follow to an effective solution. In Iraq, U.S. forces attempted to measure factors they associated with counterinsurgency, but DoD and the theater commanders never agreed on a standardized set of metrics, and they never resourced collection of the most relevant, and most difficult to collect, data regarding public opinion. Consequently, incoming units frequently designed new metrics and started from new baselines, producing a hodgepodge of data covering 9-, 12-, and 15-month increments that was useless for long-term comparisons.

While the ideal command arrangement is open to debate, the need for external, objective analysis is clear. In future, an honest broker, unbeholden to the chain of command, must collect and analyze relevant data across the entire duration of U.S. involvement in any conflict. Such an organization must be resourced to collect the necessary data regardless of cost or difficulty. Just as a venture capitalist would not commit funds to an enterprise without identifying indicators of success or without access to vital information, the U.S. cannot blindly commit itself without the ability to judge its own performance. Such an independent analytical organization, paired with an effective “red team” to challenge assumptions about the opposition, might provide leaders with the necessary information to make hard decisions.

It is certainly true that history does not repeat itself–one of Komer’s key points is that each national situation is unique–but history does highlight institutional weaknesses that can operate similarly across multiple situations if not corrected. Bob Komer’s study of institutions in Vietnam is likely to strike a chord with anyone who has spent more than a week working in a bureaucracy, and it is likely to resonate painfully with those who watched the U.S. military flounder in Iraq and Afghanistan. It often leaves the impression that U.S. counterinsurgency theorists skimmed his chapter on possible viable alternatives without bothering to place it in the context of the entire report. Komer’s observations provide a devastating view of the inherent obstacles to great power intervention, and the history of such adventures since 1972 offers little reason to believe we can overcome them.
